Remember back in 2024 when everyone was arguing about whether AI would take all the coding jobs? Turns out, the robots just needed better tools. Fast forward to February 13th, 2026, and OpenAI dropped a bombshell: GPT-5.3-Codex-Spark, a code-generating AI so sharp it makes Stack Overflow look like a toddler’s crayon drawings. But the real kicker? It’s running on Cerebras Systems’ chips, not Nvidia’s GPUs. Cue the dramatic music.

For years, Nvidia has been the undisputed king of the AI hardware hill. Their GPUs were practically synonymous with deep learning, the engines that powered everything from image recognition to those eerily accurate TikTok ads. OpenAI, the company behind GPT-3, GPT-4, and now this coding whiz kid, was one of Nvidia’s biggest customers. So, what happened? Why the sudden hardware shakeup? It’s a story of ambition, innovation, and maybe, just maybe, a little bit of hardware envy.

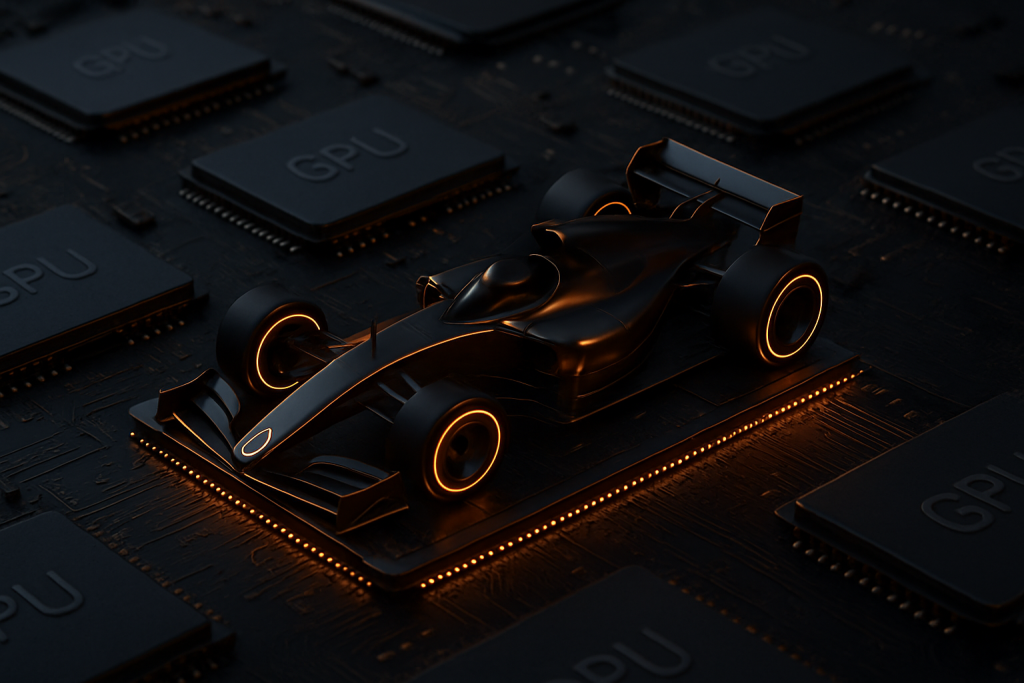

Think of it like this: Nvidia was the reliable minivan, getting the whole AI family where they needed to go. Cerebras, on the other hand, is like a custom-built Formula 1 race car. It's designed for one thing and one thing only: blazing-fast AI computations. Cerebras didn't just tweak existing silicon; it built a single giant chip that spans an entire silicon wafer, designed specifically for AI workloads. It's the kind of thing that makes engineers drool and venture capitalists reach for their checkbooks.

GPT-5.3-Codex-Spark isn’t just another incremental upgrade. It’s built to write code, and write it well. We’re talking about AI that can not only generate basic functions but also understand complex algorithms, debug code, and even translate between different programming languages. Imagine having an AI assistant that can whip up a Python script in the morning, debug your JavaScript in the afternoon, and then write a Solidity contract for your crypto side hustle at night. The implications are huge.

The immediate impact is clear: OpenAI is diversifying its hardware dependencies. It's not putting all its eggs in one GPU-shaped basket. This gives OpenAI leverage in negotiations, potentially reduces costs, and, most importantly, lets it explore the bleeding edge of AI hardware performance. For Cerebras, this is a massive win: a high-profile validation suggesting that its specialized chip design can compete with, and potentially outperform, the GPU giants.

But the ripple effects extend far beyond OpenAI and Cerebras. This move signals a broader trend in the AI industry: the rise of specialized silicon. Companies are starting to realize that general-purpose GPUs, while powerful, may not always be the most efficient solution for specific AI tasks. We’re likely to see more companies investing in custom hardware or exploring alternative chip architectures like ASICs (Application-Specific Integrated Circuits) or even neuromorphic computing. The age of AI hardware diversity is upon us.

What does this mean for Nvidia? Well, they’re not going to disappear overnight. They still have a massive lead in the GPU market, and their technology is constantly evolving. But they can’t afford to be complacent. The competition is heating up, and the AI landscape is shifting beneath their feet. They’ll need to innovate faster, offer more specialized solutions, and maybe even consider acquiring some of these up-and-coming hardware startups to stay ahead of the game. Think of it as the AI hardware equivalent of Netflix vs. the streaming hordes.

The shift toward specialized hardware could have political and regulatory implications, too. Governments might start scrutinizing the concentration of power in the AI hardware market, potentially leading to antitrust investigations or regulations aimed at promoting competition and preventing monopolies. The global race for AI dominance is already intense, and hardware is a crucial piece of the puzzle.

And then there are the philosophical and ethical considerations. As AI becomes more powerful and more capable of automating complex tasks like code generation, we need to ask ourselves some tough questions. What does it mean to be a programmer in a world where AI can write code faster and more efficiently than humans? How do we ensure that AI-generated code is secure, reliable, and doesn’t perpetuate biases? These are questions that we need to grapple with as AI continues to evolve.

Financially, the impact could be significant. A shift towards specialized AI hardware could create new investment opportunities, disrupt existing markets, and reshape the competitive landscape. Venture capitalists are already pouring money into AI chip startups, and we’re likely to see even more activity in this space as the demand for AI continues to grow. The economic implications of AI are vast and far-reaching, and hardware is a critical enabler of this technological revolution.

OpenAI's decision to use Cerebras hardware for GPT-5.3-Codex-Spark is more than a technical footnote. It's a sign that the AI industry is maturing, diversifying, and pushing the boundaries of what's possible, and a reminder that the future of AI depends not just on algorithms and data but also on the hardware that powers them. So, buckle up, folks. The AI hardware race is on, and it's going to be a wild ride.

Discover more from Just Buzz